
Inside cDNG Files

DNG, a digital negative, was designed to hold the RAW sensor data from your camera. This post focuses specifically on the RAW data from the FS700 via the Odyssey 7Q, both the 2K and 4K RAW, but it should apply to other cameras too. cDNG is CinemaDNG, which is built on the DNG standard, and it’s becoming more common with support increasing all the time - although when it comes to valid workflows it’s the wild west out there.

![[fb]](https://www.ome.media/media/007200000003C/59a05fb59e639a0001cb22dd_debayermatrix.jpg)

Each DNG contains the data from the camera before debayering (hence RAW data). This means that each application supporting DNG can offer a myriad of different ways to debayer (or ‘develop’) this data, and each route can produce a different image. The image here shows the differences between some common algorithms. Some applications don’t give you any choices and use their own proprietary debayer approach. The DNG file also contains metadata about the image and the camera. This includes details about colour matrices, white balance, black and white points and the other information needed to recreate what the camera saw. The key to a DNG is that it is self-describing.

![[fb]](https://www.ome.media/media/007200000003C/59a05fbde70cd600015f2099_bayered.jpg)

The image above shows a pre-debayer image, with the bayer patterning still intact. You can see it is basically a greyscale image from the sensor. Below that is the kind of image that comes from the data: it's linear data, which means that it's very dark save for the highlights (you can just about see the edge of the aerial) - more on this later. There are a myriad of excellent resources around that go into the details of debayering, so there’s no point doing that here.

The next blog post will deal with Linear Light and why it's so important when grading or working with VFX.

Developing a DNG

Let's look at how a DNG file is 'developed' into a ‘real world’ image.

The first step is linearisation of the data held in the file. A linear image is a very simple but accurate representation of the real world light values in a scene. The ultimate output from a DNG is a linear image which the application can then alter further. Linear, or more accurately 'Scene Linear', means a doubling of value is a doubling of light. Camera sensors are generally linear in nature except at the extremes (shadows and highlights).

This linearisation step depends on a number of factors. Is the source camera data stored inside the DNG actually linear as well? If so then the process is very simple: apply the white and black points and scale to fit into 16 bit.

Usually cameras don’t output 0 for black. The value is raised so that the sensor noise around black is kept. If black were stored as 0, noise dipping below it would need negative values, so part of the linearisation process is to subtract the black level and remap anything below it to 0. Within some RAW developing applications (Aperture, Lightroom, ACR) you can tweak these values visually, and sometimes you can push the black point up to see more detail. However the metadata in the file should tell the application what the recommended levels are.
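As a rough sketch of that black and white point step, assuming the data is already linear, the black level is the FS700's 256, and the white level is the 12 bit maximum of 4095 (the white level and the sample values are my assumptions for illustration, not values read from a real file):

```python
def linearise(raw_values, black_level=256, white_level=4095, out_max=65535):
    """Subtract the black level, clamp, and scale into a 16 bit range."""
    span = white_level - black_level  # usable code values above black
    out = []
    for cv in raw_values:
        # Anything below black (sensor noise) is remapped to 0,
        # anything above the white level is held at the white level.
        above_black = min(max(cv - black_level, 0), span)
        out.append(round(above_black * out_max / span))
    return out


# Hypothetical raw code values from a single colour channel.
sample = [200, 256, 300, 2048, 4095]
linear16 = linearise(sample)
print(linear16)
```

A real developer reads the black and white levels from the DNG metadata rather than hard coding them, and they can differ per channel.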

If the camera's data isn’t stored as linear - for example the Blackmagic Cinema Camera - then each individual DNG can include a LUT (Look Up Table) that helps unbend the stored information back into scene linear. BMC have taken the sensor data and given it a compression curve before saving the data into the DNGs. The sensor itself is probably linear, but what is stored in the 12 bit DNG isn’t: it has a better spread of values. This is a good thing. The Alexa's ARRIRAW format is also 12 bit but encoded as log, allowing for a huge dynamic range.
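To show the idea of a linearisation LUT, here is a minimal sketch. The curve is entirely made up (a simple power function), it is not the Blackmagic curve or anything taken from a real DNG, and the function names are hypothetical. The point is only that the table has one entry per stored code value and the application looks each pixel up in it.

```python
def build_toy_lut(size=4096, out_max=65535, power=2.0):
    """Build a made-up linearisation table with one entry per stored code value."""
    # Assumed encoding: stored = linear ** (1 / power), so the table raises
    # the normalised stored value back to the power to undo it.
    return [round(((i / (size - 1)) ** power) * out_max) for i in range(size)]


def apply_lut(stored_values, lut):
    """Map stored (non-linear) code values to scene linear via table lookup."""
    top = len(lut) - 1
    return [lut[min(max(cv, 0), top)] for cv in stored_values]


lut = build_toy_lut()
stored = [0, 1024, 2048, 4095]  # hypothetical 12 bit stored values
scene_linear = apply_lut(stored, lut)
print(scene_linear)
```

Notice that the middle stored value lands well below the middle of the linear range, which is exactly the 'better spread of values' in the shadows that a curve buys you.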

The O7Q files, as well as the Digital Bolex (DBX) files, have no linearisation LUTs and therefore the suggestion is that they are linear (in the case of the DBX this is confirmed as well).

The BMC, O7Q and DBX use 12 bit files. That means they store code values (CV) from 0 to 4095. However once the black point is subtracted (in the case of the FS700 this is 256), the usable data ranges from 0 to 3839.
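The arithmetic is small enough to check by hand, but as a quick sketch: a 12 bit integer tops out at 2^12 - 1 = 4095, and taking the FS700 black level of 256 off leaves 3839 usable values. The last line is my own addition, showing how many doublings fit between the smallest non-zero value and the top.

```python
import math

BITS = 12
BLACK_LEVEL = 256  # FS700 black level quoted in the text

max_cv = 2 ** BITS - 1            # largest 12 bit code value
usable_cv = max_cv - BLACK_LEVEL  # what is left above black
stops_spanned = math.log2(usable_cv)  # doublings between CV 1 and the top

print(max_cv, usable_cv, round(stops_spanned, 2))
```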

![[fb]](https://www.ome.media/media/007200000003C/59a05fd7730ab500018894f7_fourchannels.jpg)

The next step is the debayering process. A bayer filter can have a few different arrangements. The metadata within the file again informs the application how the data is arranged so the application can generate a single image from the different colour pixels. In the case of the O7Q DNGs the pattern is RG GB, so two green pixels for every red and blue - this is pretty normal. This debayering process is open to all sorts of optimisations and that’s why each application can produce different results from the same RAW file. The image above shows the 4 channels from an example DNG. The source image is night time, quite dark but with the overhead lights clipping.
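Before any interpolation happens, the mosaic can be pulled apart into its four channels, which is what the four channel image shows. A minimal sketch, assuming an RGGB layout with red at the top left and a mosaic held as a plain list of rows (the tiny 4x4 mosaic and its values are invented):

```python
def split_rggb(mosaic):
    """Split an RGGB bayer mosaic (list of rows) into four quarter-size planes."""
    return {
        "R":  [row[0::2] for row in mosaic[0::2]],  # even rows, even columns
        "G1": [row[1::2] for row in mosaic[0::2]],  # even rows, odd columns
        "G2": [row[0::2] for row in mosaic[1::2]],  # odd rows, even columns
        "B":  [row[1::2] for row in mosaic[1::2]],  # odd rows, odd columns
    }


# Invented values: R=10, first green=20, second green=21, B=30.
mosaic = [
    [10, 20, 10, 20],
    [21, 30, 21, 30],
    [10, 20, 10, 20],
    [21, 30, 21, 30],
]
planes = split_rggb(mosaic)
print(planes)
```

A real application should take the layout from the metadata rather than assume it, since other arrangements exist. Keeping the two greens separate also makes it easy to compare them row against row.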

![[fb]](https://www.ome.media/media/007200000003C/59a05fe1730ab500018894fd_basicdebayer.jpg)

![[fb]](https://www.ome.media/media/007200000003C/59a05feb2b6ed30001131f6f_basicdebayerlifted.jpg)

The final step is applying the white balance and colour matrices. Each bayer sensor has a different type of colour filter, depending on camera and manufacturer. These colours need to be corrected and the final image needs to end up in XYZ space, which is a very large generic colourspace. Most bayer sensors have more green pixels than red or blue. It is also possible for each alternate row of green to have a slightly different type of green. There’s a metadata parameter called Bayer Green Split which helps the application determine how different each row might be. The white balance handles adjusting the RGB channels so that white is white for whatever the camera setting is. The metadata contains various types of colour parameters to tell the application about the spectral measurements of these filters, which in turn help to transform the colour into real world colour at the end.
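In code terms the white balance is a per-channel gain and the colour matrix is a 3x3 multiply on each pixel. This is only a toy sketch: the grey patch values and the matrix are made up, the output is a generic space rather than real XYZ, and a real DNG developer derives both steps from the colour metadata in the file.

```python
def white_balance(rgb, gains):
    """Scale each channel by its gain."""
    return [value * gain for value, gain in zip(rgb, gains)]


def apply_matrix(rgb, matrix):
    """Multiply an RGB triple by a 3x3 matrix (list of rows)."""
    return [sum(matrix[row][col] * rgb[col] for col in range(3)) for row in range(3)]


# Hypothetical linear camera values for a neutral grey patch: green reads brightest.
grey_camera = [0.10, 0.18, 0.12]
# Gains that make the patch neutral, normalised so green is left alone.
gains = [grey_camera[1] / channel for channel in grey_camera]
# Made-up camera-to-output matrix; each row sums to 1 so neutrals stay neutral.
matrix = [
    [1.6, -0.4, -0.2],
    [-0.3, 1.5, -0.2],
    [0.0, -0.5, 1.5],
]

balanced = white_balance(grey_camera, gains)
output = apply_matrix(balanced, matrix)
print(balanced, output)
```

The order matters here: the green cast is removed by the gains first, and the matrix then deals with how saturated and how accurate the colours are.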

![[fb]](https://www.ome.media/media/007200000003C/59a05ff59e639a0001cb22f8_basicpostcolour.jpg)

The sequence of images above shows the colour work that happens. The topmost image shows how dark a linear image really looks. The image under it has been brightened so you can see that the basic colour image out of a RAW camera is usually green. This is because the green channel is more sensitive, so brighter. The last image shows the result after colour matrixing, with the colours as actually seen. We'll come back to the matrixing step in future articles because from the RAW data it is possible to white balance and apply colour transformations that can quite radically alter the look of the image.

How does this work for the FS700?

For the FS700 this poses a bit of a question mark. I am not privy to what Sony or Convergent Design are doing, so what I’m writing here is based on observation and basic math. I have no insider knowledge (but you know, if someone wants to correct me, I’m more than happy to listen and learn). It's an odd situation, because Sony call the RAW out of the FS700 12 bit Linear RAW.

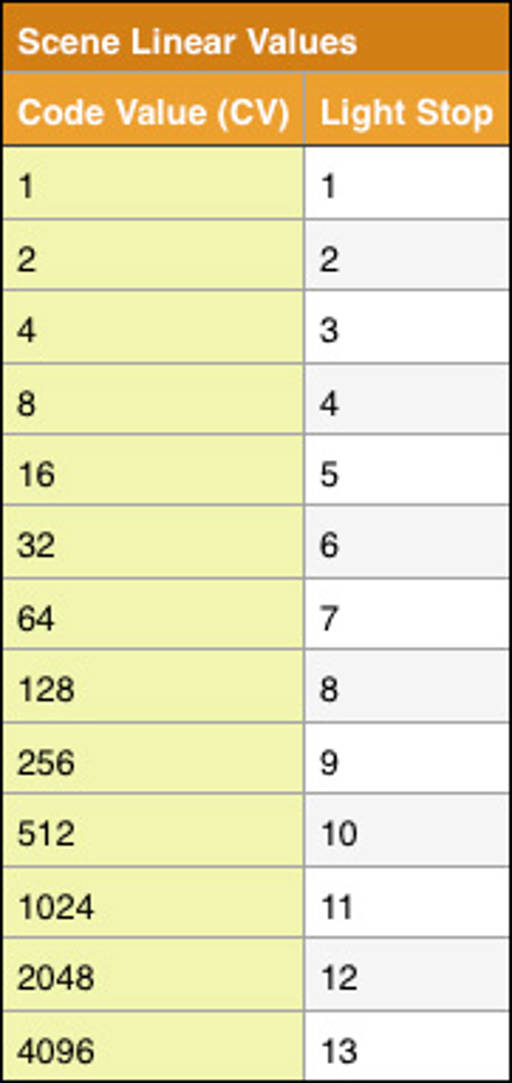

If this was truly simple 12 bit Linear data then the absolute maximum range the camera could record would be 12 stops, but that’s technical range. When light is represented with just 12 bits (values from 0 to 4095) in a linear fashion, the bottom 4 stops of light have to be represented by actual data values between 0 and 15. So 15 values for all the shadows? See the table showing the distribution of CV in a true Linear file.
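The distribution is easy to generate yourself. This sketch assumes an ideal linear encoding with no noise and, by default, no black offset: each stop down from clipping simply gets half the code values of the one above it.

```python
def codes_per_stop(bits=12, black=0):
    """List (stop, lowest CV, highest CV, count) for each stop below clipping."""
    rows = []
    hi = 2 ** bits - 1 - black
    stop = 1
    while hi >= 1:
        lo = hi // 2  # the stop below ends here
        rows.append((stop, lo + 1, hi, hi - lo))
        hi = lo
        stop += 1
    return rows


table = codes_per_stop()
for stop, low, high, count in table:
    print(f"stop {stop:2d}: CV {low:4d} to {high:4d} ({count} values)")
```

The top stop alone takes half of everything, and the bottom four rows add up to the 15 values mentioned above. Passing `black=256` gives the same picture for the range left after the FS700 black level is removed.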

As an aside, when you are testing for these step values you must do so on the raw image data, not the data after debayer and colour matrixing. If you test afterwards there will appear to be more steps in the shadow range, because the values you are testing are then based on combinations of RGB. When looking at these raw values you should choose a colour channel to focus on based on the scene: outside there will be more blue, inside more red, and green is the more sensitive channel overall.

It would be possible to get around this by encoding the source signal from the FS700 in Slog and indeed, you need to set the camera to Slog for it to work properly. But Sony call it Linear RAW, not Log. They’re pretty insistent on that too.

Why do I care? Well, if shadow performance is compromised then we need to know this for exposure reasons, to avoid the very low end or to expose with this knowledge. If we understand how our camera works then we can make the best use of it. Frankly I have no issue with 11 or 12 stops either, more than happy with that too, if they are usable stops. But I do want to know when I can raise and lower values without quantisation or linearisation problems.

So some investigation is needed.

![[fb]](https://www.ome.media/media/007200000003C/59a06005fcf08500017d98fc_rawdigger.jpg)

Adobe have a useful command line utility called dng_validate. Using this I can open those DNGs and output the various steps of processing. There is also a lovely little application called RawDigger which allows for all sorts of poking around in these files.

What I want to see first is whether the output of the camera is Linear.

I’d add a caveat here because I’m not getting the original Sony RAW, I’m getting a version of it that has been decrypted and transcoded to DNG via the O7Q. So my observations are based on the resulting DNGs. Perhaps the Sony RAW versions of the files (if I had them) would be different, although I doubt it.

I can see that the files are 12 bit for sure, values from 0 to 4095. There was some confusion for me because I looked at the files in Photoshop after exporting them as 16 bit TIFFs from dng_validate. I was convinced the files were 11 bit for ages until I realised that Adobe use 15 bit math for 16 bit files, and the eyedroppers in the Adobe range use 0 to 32768 for 16 bit files. Cue forehead slapping moment and apologies to anyone I was ranting about this to. Especially the wonderful tech guys at Convergent Design who must be sick of me by now.

I tried a number of approaches to confirm the results I was seeing. I did one which involved shooting a Macbeth chart through the range of a lens and ND, from f2.8 to f22 and then ND1 to 3. This gave a large dynamic range to test with but I wasn’t convinced the lens was totally accurate with its aperture settings. Tim Dashwood did a test using stacked bits of ND film, from 1 to 17 stops worth. This is a great test and the DNG is downloadable from his site. Finally I used a step wedge with 41 steps in 1/3 stop increments. This seemed the best choice and it’s the same step wedge that dpreview use for their camera reviews, so a comparison can be made between the FS700 and the world of dSLRs.

![[fb]](https://www.ome.media/media/007200000003C/59a0600ffcf08500017d9900_wedgeview.jpg)

The graph for this can be seen below. The values are plotted on a log axis, which shows the doubling of value as a straight line and makes this much easier to see. I think the important thing to draw from it is that it really does look linear: each stop down exhibits a halving of the data. These data levels are taken before colour matrixing.
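If you would rather have a number than a straight-looking line, you can fit the slope of log2(value) against stops. For truly linear data the slope should be very close to -1, i.e. one halving per stop. The data below is synthetic, generated to be perfectly linear at 1/3 stop spacing. It is not my wedge measurements, and the function name is just for this sketch.

```python
import math


def log2_slope(stops_down, values):
    """Least-squares slope of log2(value) against stops below the brightest wedge."""
    logs = [math.log2(v) for v in values]
    n = len(stops_down)
    mean_x = sum(stops_down) / n
    mean_y = sum(logs) / n
    num = sum((x - mean_x) * (y - mean_y) for x, y in zip(stops_down, logs))
    den = sum((x - mean_x) ** 2 for x in stops_down)
    return num / den


# Synthetic wedge: 24 steps at 1/3 stop spacing, values above black level.
wedge_stops = [i / 3 for i in range(24)]
synthetic = [3000 * 2 ** -s for s in wedge_stops]

slope = log2_slope(wedge_stops, synthetic)
print(round(slope, 3))
```

With real measurements you would subtract the black level first and leave out any clipped wedges, otherwise both ends drag the slope away from -1.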

![[fb]](https://www.ome.media/media/007200000003C/59a060184eb22e0001dd17e6_rawgraph.jpg)

In this simple test I plotted the separate R, G and B values to get this curve, just to see. In RawDigger I selected each wedge and noted the average values (I only took one of the green channels). The graph clearly shows that the green is more sensitive all over and is the first channel to clip.

![[fb]](https://www.ome.media/media/007200000003C/59a060209e639a0001cb2315_actualvalues.jpg)

This is really important, especially when we plot the CV (Code Values) for the ranges. It is true the shadows are basically linear and all the data for the shadows is held in very few CV - the lower 4 stops are held in just 10 integer values. Conversely there is a huge amount of information in the brightest stops, the brightest 2 hold nearly 3000 values, what a waste! The graph shows average values over an area, which is why there are decimal places, and this shows the effect of noise on the image - without the noise it would be more banded. I stopped counting at the 38th wedge, as I really could not discern any detail in the noise at that point.

The results of this graph should have an effect on how we choose to expose our scenes.

Am I saying that the RAW is compromised?

No, not at all, this is just Linear RAW at work.

I don’t know what the FS700 is actually capable of, I don’t know how good the sensor really is or how good the supporting hardware is. I must assume that the choice of 12 bit output was considered a balancing act of price and performance. And it is: I don’t know of any other camera in this price range with this performance and I am very very happy with the 700 and O7Q combination.

However, if the output of the camera was, for example, 14 bit and the supporting hardware was based around that, then those bottom four stops, which are currently being represented by CV 0 to 15, would be represented by CV 0 to 63, quite an improvement in detail. However there’s a chance that in reality it would all be noise because the sensor isn’t capable of delivering discrete levels at that range. Or this could be the main difference between the 700 and the F5 - which is 14 bit.
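The 15 versus 63 comparison is just a matter of where the same four shadow stops land in a bigger container. A small sketch, assuming an ideal linear encoding of a 12 stop range with the black offset ignored (the function name and defaults are mine):

```python
def shadow_top_cv(bits, range_stops=12, shadow_stops=4):
    """Highest code value used by the bottom `shadow_stops` of the range."""
    full_scale = 2 ** bits
    # The shadows end (range_stops - shadow_stops) halvings below full scale.
    return full_scale // 2 ** (range_stops - shadow_stops) - 1


for bits in (12, 14):
    print(bits, "bit: bottom four stops use CV 0 to", shadow_top_cv(bits))
```

Two extra bits give four times as many levels everywhere, which only helps if the sensor noise is low enough for those levels to mean something.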

To me this explains some of the shadow issues I’ve seen on the 700 even before RAW. There have always been shadow issues and I guess this is down to the ADC hardware inside. That’s fine. We just need to know.

Comparing RAW to Developed

Here are a couple of examples showing a RAW image before any work is done, with its untouched raw code values from the file, and then a developed version of the same file, so you can see how the code values are converted into full RGB values and how the linearity of the original changes.

![[fb]](https://www.ome.media/media/007200000003C/59a0602b9e639a0001cb231c_rawToDeveloped01.jpg)

Some Conclusions

How does this change things for me? It means that if I am using RAW (and we’ll get on to a comparison between Slog and RAW in another post) then because of the linear nature I can expose a bit higher and then simply take that down in post to basically ‘free up’ more shadow detail.

I think this also highlights (pun intended) the dynamic range arms race, much like the megapixel race. Because there is a subtle curve on the shadows, the dynamic range of the recorded image could be >12 stops, but it’s simply not usable or real data. In Tim Dashwood’s test there are 17 stops of wedges and you can actually make out all 17, but the last 6 seem much the same. They’re just not really there, it's like the camera never truly records black. 11 or 12 stops is plenty to record in most situations. I’d love to be able to try the same things with other cameras because I feel that when you get to the nitty gritty they are going to be the same too. This is technical range vs usable range. Both DBX and the new Cion are claiming ‘only’ 12 stops, which is great because they are probably real stops. I'm sure many will be up in arms claiming 14 stops or whatever. I'm really sorry, it just doesn't seem to be there, the data says so. Have I made mistakes? Possibly. The step wedge could be incorrect, although I did a few other versions to sanity check the results so I'm pretty comfortable that it is okay. I believe there are some other range tests too, one from Xyla if memory serves, and maybe they'd give different results.

One advantage to this single step wedge is that you can compare these wedge results with existing dSLRs and you’ll be surprised at how good the FS700 is in the real world - none of these stills cameras are really claiming 14 stops of range and none of them have.

However...

Hidden Range, Exposure and Colour Sensitivities

We can see from the raw data that Green is so much more sensitive than the others. The graph at the top shows where the O7Q monitor showed clipping (actually a bit above this) 3 wedges in. I’ve looked at similar outputs from the BMC and DBX and green is always more sensitive, but for the FS700 it is 40-50% more, which seems higher than the other cameras. I assume this is on purpose to give increased dynamic range. I’m speculating here, but what I do know is how it affects highlight handling.

![[fb]](https://www.ome.media/media/007200000003C/59a0603b2b6ed30001131f9d_highlights.jpg)

Look at this same wedge where I have just clipped the brightest steps. I could see on the O7Q monitor image that the range is clipping at the same point as CinemaDNG, which is what I would expect to see - the waveform on the O7Q shows the RGB values after colour matrixing (it would be great to have an option to see them before). Because green clips first (see the graph above), the image has ‘technically' clipped. However in that raw data there is additional information in the less sensitive red and blue channels that hasn’t clipped and is quite detailed too.

I’ve often wondered what highlight recovery, and the ‘highlight’ checkbox in Resolve, actually does. As far as I can tell it extends the detail in the highlights by filling the clipped green channel with data from the red and blue channels. You can do this in the knowledge that highlights roll off to white. However in some cases this can produce magenta artefacts in extreme highlights, and that’s why. The BMDFilm option allows for highlight recovery, whereas the CinemaDNG one doesn't. The difference is pretty big: that's a whole stop more range from the RAW file. The Slog2 file from the O7Q is clipped at the same point as the CinemaDNG. I hasten to add that BMDFilm is not an ideal option to work in either, more on that in a different post.
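Here is a very rough sketch of that idea, to make the 'fill green from red and blue' step concrete. To be clear, this is my guess at the general principle and not Resolve's actual algorithm. The function name, the clip level and the green-to-red and green-to-blue ratios are all assumptions made up for the example. It leans on the assumption that the clipped pixel is neutral, which is also why it can go wrong on coloured highlights.

```python
def recover_green(r, g, b, clip_level, g_per_r, g_per_b):
    """Estimate a clipped green value from unclipped red and blue (neutral assumed)."""
    if g < clip_level:
        return g  # green is not clipped, nothing to recover
    # For a neutral highlight green should sit at a fixed ratio above red
    # and blue, so average the two predictions.
    estimate = 0.5 * (r * g_per_r + b * g_per_b)
    return max(g, estimate)  # never pull a clipped value down


CLIP = 3839  # assumed clip point: 12 bit maximum minus the 256 black level
# Assumed ratios of green to red and blue on a neutral patch.
G_PER_R, G_PER_B = 1.5, 1.4

untouched = recover_green(1000, 1500, 1071, CLIP, G_PER_R, G_PER_B)
recovered = recover_green(3000, CLIP, 3200, CLIP, G_PER_R, G_PER_B)
print(untouched, recovered)
```

Once red or blue clip as well there is nothing left to rebuild from, which puts a natural ceiling on how much extra range this can find.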

I think this is important to remember. The RAW data can be reconstructed in a number of ways, not just filling in the missing pixels but also the way the colour is processed. When you have a file direct from the camera, be it REC709 or Slog2, then that’s it - those choices have been made and baked in. The DNGs do allow for a degree of flexibility here and it’s possible to squeeze a bit more out for some edge cases. Of course, whether it is worth the effort is a very valid question.

You could recover some highlights in Slog2, but because the colour channels have been white balanced then the degree of clipping one may have compared to another isn’t going to be as great as there is in the RAW. So the resultant recovery process wouldn’t generally be quite so different.

It also means that from a workflow point of view there are numerous approaches, all of which can produce different results. This is a subject for the next blog post too.

Exposing RAW

From an exposure viewpoint you really want to expose as far to the right as possible, bearing in mind that if you are shooting narrative drama you don’t want to be exposing differently shot by shot. If you do decide to do that, give the colourist a fighting chance by shooting a grey card before each shot so there is something consistent to balance with. Of course if you’re changing ISO to get the best for a particular scene you can end up with different noise patterns from shot to shot and that’s probably not a good thing.

Is there a guideline on how to expose? I think it depends on your preference and scene. Remember that the top two stops hold 3/4 of the Code Values, but they appear to be a little non linear, so simply pulling them down might not be perfectly accurate. However the non linearity is pretty minor so visually you might be able to get away with it.

So in a given scene you can expose higher and bring it down in post to gain more shadow detail if that is what you are after - can you afford to lose a stop at the top end? Be especially mindful of skin tones with highlights on. One of the benefits of RAW and all this headroom is that the roll off on skin looks natural and pleasing.
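To put a number on what exposing one stop higher buys you in the shadows, here is a small sketch assuming ideal linear data and 3839 usable code values (the 12 bit maximum minus the 256 black level). The function name and the choice of a tone 8 stops under clipping are mine, purely as an example.

```python
def levels_in_stop(stops_below_clip, usable_cv=3839):
    """Integer code values inside the stop whose top is N stops under clipping."""
    top = int(usable_cv / 2 ** stops_below_clip)
    bottom = int(usable_cv / 2 ** (stops_below_clip + 1))
    return top - bottom


# A shadow tone that sits 8 stops under clipping at 'normal' exposure...
normal = levels_in_stop(8)
# ...sits only 7 stops under clipping when exposed one stop higher.
plus_one = levels_in_stop(7)
print(normal, plus_one)
```

The same shadow detail gets roughly twice as many code values to live in, and the price is one stop less room before the highlights clip.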

![[fb]](https://www.ome.media/media/007200000003C/59a060464eb22e0001dd17fe_whitebalance-comparison.jpg)

White Balance

There was some suggestion that white balance was baked into the output of the camera, but I cannot see that. It appears that the data in the DNGs is the same regardless of what the white balance setting is. I shot a Macbeth chart in the same 3200K light with WB set to 3200K and to 5600K. The 5600K output gives a warm image as I would expect, but the raw data for it appears the same.
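One way to make that comparison less of an eyeball job is to compare the channel ratios of the same grey patch in the two files. If white balance were baked in, the red to green and blue to green ratios of the raw data would shift between the two settings. The numbers below are invented stand-ins for per-channel averages, not my actual measurements, and the 2% tolerance is an arbitrary choice to allow for noise.

```python
def channel_ratios(means):
    """Return (R/G, B/G) for a tuple of per-channel averages (R, G, B)."""
    r, g, b = means
    return (r / g, b / g)


def looks_baked(means_a, means_b, tolerance=0.02):
    """True if the channel ratios differ by more than `tolerance` (relative)."""
    ratios_a = channel_ratios(means_a)
    ratios_b = channel_ratios(means_b)
    return any(abs(a - b) / a > tolerance for a, b in zip(ratios_a, ratios_b))


# Invented raw averages of one grey patch, black level already subtracted.
wb_3200 = (812.0, 1190.0, 640.0)
wb_5600 = (815.0, 1188.0, 642.0)
print(looks_baked(wb_3200, wb_5600))
```

Using ratios rather than absolute values means a small exposure difference between the two shots doesn't get mistaken for a white balance change.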

Colour Processing

This is an area which is a bit strange to me. I am writing a post on how the colours get translated, but from an observation point of view I found the Reds generated by the camera are really not quite right. I’ve done a number of tests, including comparing RAW output from Sony stills cameras as well. My conclusion is that the RAW output is correct and the super saturated reds in this case are an interaction between a particular application and the data within certain colourspaces. This is quite a big topic so I'm expanding on this in another blog post soon.

In brief however, where things do get sticky is the workflow side of things where it is very easy to push the RAW into colourspaces with incorrect colours, incorrect gamma and clipping issues.

I think the accepted approaches of using CinemaDNG in Resolve, for example, aren’t ideal at all, the colours don’t appear totally right there. The BMDFilm is an interesting option but by Blackmagic’s own admission it is tweaked for the colorimetry of their own cameras. The Rec709/Linear route seems okay and the P3 route, done correctly, appears to yield good results. In reality the route by which you extract colour from these DNGs largely depends on your forward post route and what you need. There’s no one size fits all at the moment.

Up and coming blog posts will deal in more detail with Workflow options and also attempt to demonstrate why grading in baked in colourspaces (like customised Rec709 gamma curves) isn’t always a good idea.

Please feel free to contact me if you have any comments. This, and other posts, have been as a result of weeks, months of ripping apart DNGs and understanding multiple workflows for our current feature. There's plenty more to come.